Building a SwiftUI App for Scanning Text Using the Camera

This tutorial will guide you through building a SwiftUI app that scans and recognizes printed text using the iPhone’s camera. It uses AVFoundation for camera management and the Vision framework for Optical Character Recognition (OCR). The worked example is a credit card number scanner, but the same pattern applies to digitizing other printed text.

Prerequisites

Before starting, ensure you have:

• Xcode installed on your Mac.

• Basic familiarity with SwiftUI.

• An understanding of handling permissions in iOS apps.

Step 1: Configuring App Permissions

To use the camera, your app must request permission from the user, which involves updating the Info.plist file.

Update Info.plist:

Add the following key-value pair to your Info.plist file:

• Key: NSCameraUsageDescription

• Value: “This app requires camera access to scan text.”

This description will appear to the user when the app first requests camera access.
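The Info.plist entry only supplies the prompt text; the app still has to check and request authorization at runtime. Below is a minimal sketch of that check, assuming you want to gate the scanner on the result. The enum `CameraAccessAction` and the functions `cameraAccessAction(for:)` and `requestCameraAccess(completion:)` are hypothetical names introduced for illustration and are not part of the original tutorial.

```swift
import AVFoundation

// Hypothetical enum for illustration: what the app should do for each status.
enum CameraAccessAction: Equatable {
    case startScanning   // permission already granted
    case promptUser      // never asked: show the system prompt
    case explainDenied   // denied or restricted: the user must change this in Settings
}

// Maps the system authorization status to one of the actions above.
func cameraAccessAction(for status: AVAuthorizationStatus) -> CameraAccessAction {
    switch status {
    case .authorized:
        return .startScanning
    case .notDetermined:
        return .promptUser
    case .denied, .restricted:
        return .explainDenied
    @unknown default:
        return .explainDenied
    }
}

// Hypothetical helper: calls `completion` with true when the camera may be used.
func requestCameraAccess(completion: @escaping (Bool) -> Void) {
    let status = AVCaptureDevice.authorizationStatus(for: .video)
    switch cameraAccessAction(for: status) {
    case .startScanning:
        completion(true)
    case .promptUser:
        // The system prompt shows the NSCameraUsageDescription text.
        AVCaptureDevice.requestAccess(for: .video) { granted in
            // The handler may run on an arbitrary queue; hop back to main for UI work.
            DispatchQueue.main.async { completion(granted) }
        }
    case .explainDenied:
        completion(false)
    }
}
```

One way to use this is to call `requestCameraAccess` before presenting the scanner and to show an explanatory message when it reports `false`.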

Step 2: Setting Up the Credit Card Camera View

Create a UIViewControllerRepresentable that wraps a UIKit view controller hosting the AVFoundation camera preview, so the camera can be used within your SwiftUI interface.

Code Snippet:

    import SwiftUI
    import AVFoundation
    import Vision

    struct CreditCardScannerView: UIViewControllerRepresentable {
        // Called on the main queue with the detected 16-digit number.
        var onCreditCardNumberDetected: (String) -> Void

        func makeCoordinator() -> Coordinator {
            return Coordinator(self)
        }

        func makeUIViewController(context: Context) -> UIViewController {
            let viewController = UIViewController()
            let captureSession = AVCaptureSession()
            captureSession.sessionPreset = .photo

            // Use the back wide-angle camera; return an empty controller if any setup step fails.
            guard let videoCaptureDevice = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back) else { return viewController }
            guard let videoInput = try? AVCaptureDeviceInput(device: videoCaptureDevice),
                  captureSession.canAddInput(videoInput) else { return viewController }
            captureSession.addInput(videoInput)

            // Deliver video frames to the coordinator on a background queue.
            let videoOutput = AVCaptureVideoDataOutput()
            videoOutput.setSampleBufferDelegate(context.coordinator, queue: DispatchQueue(label: "videoQueue"))
            guard captureSession.canAddOutput(videoOutput) else { return viewController }
            captureSession.addOutput(videoOutput)

            // Show the live camera feed behind any SwiftUI overlays.
            let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
            previewLayer.frame = viewController.view.layer.bounds
            previewLayer.videoGravity = .resizeAspectFill
            viewController.view.layer.addSublayer(previewLayer)

            // startRunning() blocks, so call it off the main thread.
            DispatchQueue.global(qos: .userInitiated).async {
                captureSession.startRunning()
            }

            return viewController
        }

        func updateUIViewController(_ uiViewController: UIViewController, context: Context) {}
    }

• UIViewControllerRepresentable: This protocol allows us to use a UIKit view controller in SwiftUI.

• Coordinator: The Coordinator class acts as the sample buffer delegate for AVCaptureVideoDataOutput and runs the Vision requests on each frame.

• AVCaptureSession: Manages the flow of data from the camera. Here it uses the .photo preset, which targets high-resolution still capture, even though this app only processes the video feed.

• AVCaptureDeviceInput: Represents the camera input, which is added to the session.

• AVCaptureVideoDataOutput: Captures video data, delegating frame processing to our coordinator.

• AVCaptureVideoPreviewLayer: Displays the camera feed on screen.
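The snippet above sets the preview layer's frame once, inside makeUIViewController, so the layer does not follow later size changes such as rotation. One possible refinement, sketched here under the assumption that you are willing to replace the plain UIViewController with a small subclass, is to resize the layer in viewDidLayoutSubviews and stop the session when the view disappears. `PreviewViewController` is a hypothetical name and is not part of the original tutorial.

```swift
import UIKit
import AVFoundation

// Hypothetical subclass for illustration: owns the preview layer and keeps it sized to its view.
final class PreviewViewController: UIViewController {
    let previewLayer: AVCaptureVideoPreviewLayer

    init(session: AVCaptureSession) {
        let layer = AVCaptureVideoPreviewLayer(session: session)
        layer.videoGravity = .resizeAspectFill
        previewLayer = layer
        super.init(nibName: nil, bundle: nil)
    }

    @available(*, unavailable)
    required init?(coder: NSCoder) {
        fatalError("init(coder:) is not supported in this sketch")
    }

    override func viewDidLoad() {
        super.viewDidLoad()
        view.layer.addSublayer(previewLayer)
    }

    override func viewDidLayoutSubviews() {
        super.viewDidLayoutSubviews()
        // Re-fit the layer whenever the view's bounds change.
        previewLayer.frame = view.bounds
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // stopRunning() blocks, so call it off the main thread.
        if let session = previewLayer.session, session.isRunning {
            DispatchQueue.global(qos: .userInitiated).async {
                session.stopRunning()
            }
        }
    }
}
```

With this approach, makeUIViewController would configure the session as before and return `PreviewViewController(session: captureSession)` instead of adding the layer by hand.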

Step 3: Coordinating Camera Output with Vision

The Coordinator class is where we integrate AVFoundation with Vision to process the camera feed and extract the credit card number.

Code Snippet:

    class Coordinator: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
        var parent: CreditCardScannerView
        var visionRequest = [VNRequest]()

        init(_ parent: CreditCardScannerView) {
            self.parent = parent
            super.init()
            setupVision()
        }

        func setupVision() {
            // Capture self weakly: the request is stored on self, so passing the
            // method directly as the handler would create a retain cycle.
            let textRequest = VNRecognizeTextRequest(completionHandler: { [weak self] request, error in
                self?.handleDetectedText(request: request, error: error)
            })
            self.visionRequest = [textRequest]
        }

        func handleDetectedText(request: VNRequest?, error: Error?) {
            guard let observations = request?.results as? [VNRecognizedTextObservation] else { return }

            var creditCardNumber: String?
            for observation in observations {
                // Take the most confident reading of each text region and strip spaces.
                guard let candidate = observation.topCandidates(1).first else { continue }
                let text = candidate.string.replacingOccurrences(of: " ", with: "")
                print(text) // Debug output only; remove before shipping.

                // Accept the first string made of exactly 16 decimal digits.
                if text.count == 16, CharacterSet.decimalDigits.isSuperset(of: CharacterSet(charactersIn: text)) {
                    creditCardNumber = text
                    break
                }
            }

            if let creditCardNumber = creditCardNumber {
                // Report the result on the main queue so the SwiftUI state can be updated safely.
                DispatchQueue.main.async {
                    self.parent.onCreditCardNumberDetected(creditCardNumber)
                }
            }
        }

        func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
            guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }

            // Pass the camera intrinsics to Vision when the sample buffer carries them.
            var requestOptions: [VNImageOption: Any] = [:]
            if let cameraData = CMGetAttachment(sampleBuffer, key: kCMSampleBufferAttachmentKey_CameraIntrinsicMatrix, attachmentModeOut: nil) {
                requestOptions = [.cameraIntrinsics: cameraData]
            }

            // .right corresponds to the back camera with the device held in portrait.
            let imageRequestHandler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, orientation: .right, options: requestOptions)
            do {
                try imageRequestHandler.perform(self.visionRequest)
            } catch {
                print(error)
            }
        }
    }

• setupVision(): Initializes the Vision request. We use VNRecognizeTextRequest to detect and extract text from the camera feed.

• handleDetectedText(): Processes the results from the Vision request. It filters the recognized strings to find the first one that consists of exactly 16 digits and treats it as the credit card number.

• captureOutput(): Captures the video frames and feeds them into Vision’s VNImageRequestHandler for processing.
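The 16-digit test in handleDetectedText() accepts any run of sixteen digits, so a misread frame can still produce a false match. A common extra filter for card numbers is the Luhn checksum. The sketch below shows one way to add it; `cardNumberCandidate(from:)` and `passesLuhnCheck(_:)` are hypothetical helper names, and the Luhn step is an addition that the original tutorial does not include.

```swift
// Hypothetical helper: returns true when the digit string satisfies the Luhn checksum.
func passesLuhnCheck(_ digits: String) -> Bool {
    guard !digits.isEmpty else { return false }
    var sum = 0
    // Walk from the rightmost digit, doubling every second one.
    for (index, character) in digits.reversed().enumerated() {
        guard character.isASCII, let value = character.wholeNumberValue else { return false }
        if index % 2 == 1 {
            let doubled = value * 2
            sum += doubled > 9 ? doubled - 9 : doubled
        } else {
            sum += value
        }
    }
    return sum % 10 == 0
}

// Hypothetical helper: strips spaces, then requires 16 ASCII digits and a valid checksum.
func cardNumberCandidate(from text: String) -> String? {
    let digits = text.filter { $0 != " " }
    guard digits.count == 16,
          digits.allSatisfy({ $0.isASCII && $0.isNumber }),
          passesLuhnCheck(digits) else { return nil }
    return digits
}
```

Inside the loop in handleDetectedText(), the existing count and CharacterSet check could then be replaced with `if let number = cardNumberCandidate(from: candidate.string)`. Note that not every card number is 16 digits long, so the length rule remains an assumption carried over from the tutorial.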

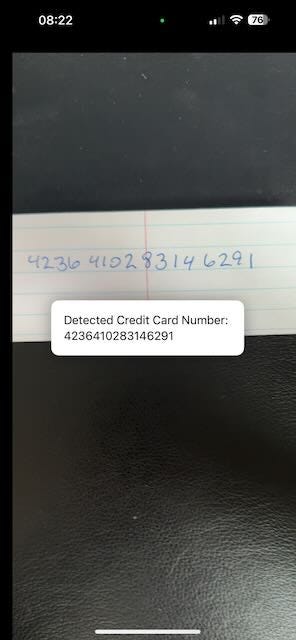
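setupVision() creates the text request without changing any of its options. For a live camera feed that only needs digits, it may be worth trading some accuracy for speed and turning off language correction, which is designed for natural-language words. The following is a rough sketch of that configuration; `makeCardTextRequest(completion:)` is a hypothetical factory name, and the chosen settings are a suggestion to experiment with, not values taken from the original tutorial.

```swift
import Vision

// Hypothetical factory for illustration: builds a text request tuned for live digit scanning.
func makeCardTextRequest(completion: @escaping VNRequestCompletionHandler) -> VNRecognizeTextRequest {
    let request = VNRecognizeTextRequest(completionHandler: completion)
    // .fast lowers per-frame latency; switch to .accurate if digits are misread.
    request.recognitionLevel = .fast
    // Card numbers are not dictionary words, so language correction is disabled here.
    request.usesLanguageCorrection = false
    return request
}
```

In setupVision(), the returned request could be stored in visionRequest in place of the default one.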

Step 4: Displaying Recognized Text

Add a Text view to your SwiftUI layout to show the recognized text.

Code Snippet:

    import SwiftUI

    struct CameraScanView: View {
        // Holds the most recently detected number; empty until a match is found.
        @State private var creditCardNumber: String = ""

        var body: some View {
            ZStack {
                // Full-screen camera feed; the closure runs when a number is detected.
                CreditCardScannerView { cardNumber in
                    self.creditCardNumber = cardNumber
                }
                .ignoresSafeArea()

                // Overlay the result once a number has been detected.
                if !creditCardNumber.isEmpty {
                    Text("Detected Credit Card Number: \(creditCardNumber)")
                        .padding()
                        .background(Color.white)
                        .foregroundStyle(.black)
                        .cornerRadius(10)
                        .shadow(radius: 10)
                        .padding()
                }
            }
        }
    }

• State Management: The creditCardNumber is a @State variable that holds the detected card number.

• ZStack: We use ZStack to layer the camera view behind the detected credit card number display.

• Conditional Display: The detected card number is displayed only when it is not empty.
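The view above shows the full number exactly as it was recognized. If you would rather not display every digit on screen, one option is to mask all but the last four digits and group the result in blocks of four. The helper below is a minimal sketch of that idea; `maskedCardNumber(_:)` is a hypothetical name and masking is a design choice that the original tutorial does not make.

```swift
// Hypothetical helper: masks all but the last four digits and groups the result in fours.
func maskedCardNumber(_ number: String) -> String {
    let digits = Array(number.filter { $0.isNumber })
    // Index of the first digit that stays visible.
    let visibleStart = max(digits.count - 4, 0)
    var groups: [String] = []
    for start in stride(from: 0, to: digits.count, by: 4) {
        let end = min(start + 4, digits.count)
        let group = (start..<end).map { index -> String in
            index < visibleStart ? "•" : String(digits[index])
        }.joined()
        groups.append(group)
    }
    return groups.joined(separator: " ")
}
```

In CameraScanView, the overlay could then read `Text("Detected Credit Card Number: \(maskedCardNumber(creditCardNumber))")`.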

Conclusion

With this setup, you’ve created a simple yet powerful credit card scanner in SwiftUI. By combining AVFoundation and Vision, this component efficiently captures and processes live video feed to detect credit card numbers. This pattern can be extended for various text recognition tasks, giving you a versatile tool in your SwiftUI arsenal.

If you want to learn more about native mobile development, you can check out the other articles I have written here: https://medium.com/@wesleymatlock

🚀 Happy coding! 🚀

By Wesley Matlock on May 30, 2024.

Exported from Medium on May 10, 2025.